Google BigQuery

Integrate Query with Google Cloud Platform's (GCP's) BigQuery - the serverless data warehouse

TL;DRTo integrate GCP BigQuery Query:

- Create a GCP Service Account with permissions to use BigQuery and generate a JSON Key.

- Add a platform connection in Query using your Service Account Key, Dataset and Table.

- Use the Configure Schema workflow to introspect and map your data into the Query Data Model.

- Use Query Federated Search to surface any number of indicators, assets, findings, alerts, and anything else stored in your BigQuery Datasets by entities, events, and objects.

Overview

Google Cloud Platform (GCP) BigQuery is a fully-managed, serverless Enterprise Data Warehouse (EDW) that enables scalable analysis over petabytes of data. You can execute SQL queries against massive datasets with rapid execution times. BigQuery handles the infrastructure, providing you with a powerful analytics engine that can pull insights from vast amounts of data with minimal management required. Security and IT teams typically leverage BigQuery's massive scale and performance to store event logs, archival data, asset information, and more.

Within the Query Federated Search Platform, BigQuery is considered a dynamic schema platform that can contain any number of source data within it. For that purpose, Query provides a Configure Schema no-code workflow to allow users to easily introspect, auto-discover ingestion and time-based partitions in the tables, and map the source data into the Query Data Model (QDM). This allows you to model nearly any logging or event data, or asset data, stored within a BigQuery dataset and table.

Prerequisites

The Query integration with BigQuery relies on the usage of GCP IAM Service Accounts with the proper permissions granted to them by assigned roles. You can use an existing Service Account and generate a Key for it, or proceed to the following sections for a guided walkthrough of creating a Role, Service Account, and Key.

Create GCP IAM Role for BigQuery

In this section you will create an IAM Role in GCP to provide minimum necessary permissions to BigQuery to your the Service Account you will create in later steps. All steps should be executed from the Project that houses your BigQuery Datasets with the Tables you will connect with Query.

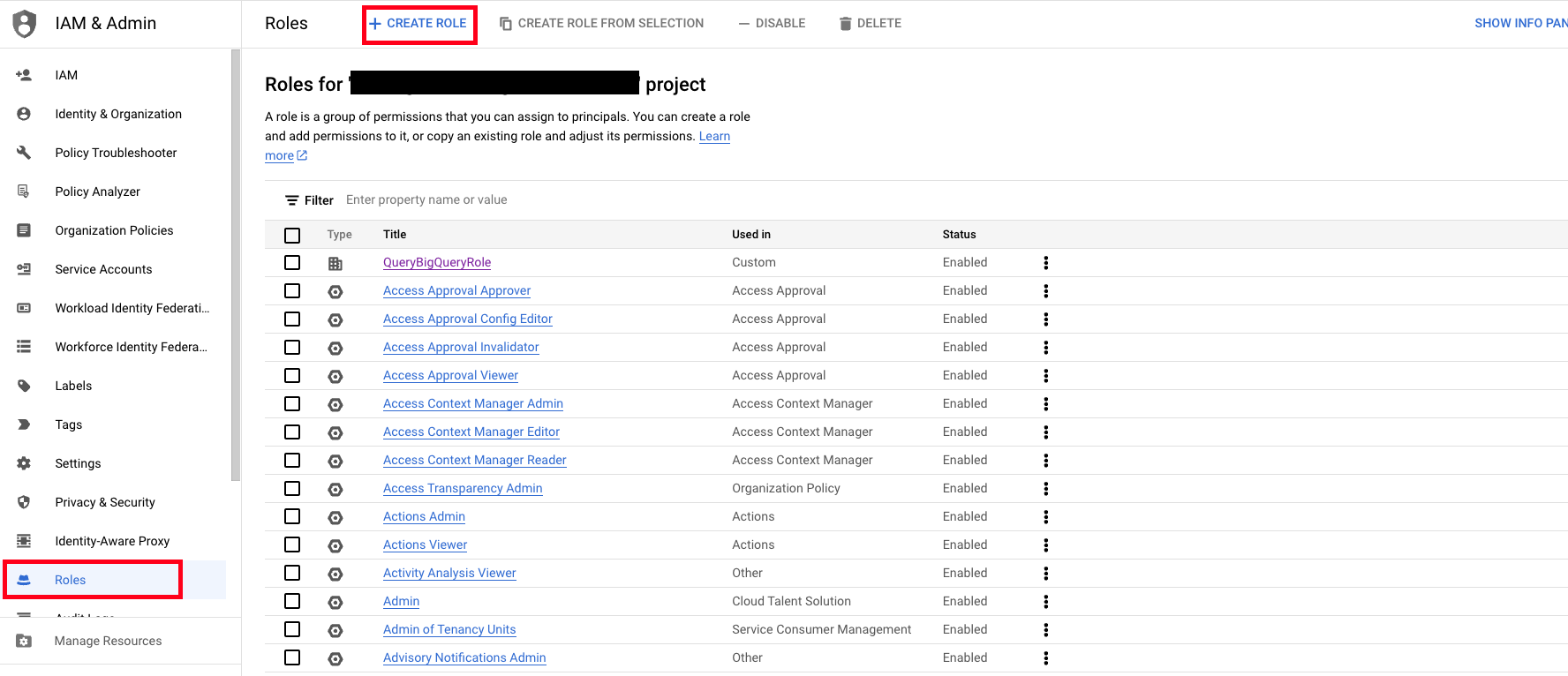

- In the GCP Console, navigate to the IAM & Admin console, select Roles in the left-hand navigation pane and then select Create Role as shown below (FIG. 1).

FIG. 1 - Preparing to create an IAM Role in the GCP Roles console

- Populate the following values for your new Role.

- Title: You can name it whatever you want, for the purposes of the documentation, it is named

QueryBigQueryRole. - Description: Provide a description.

- ID: This can be the same as the Title, in this instance it is

QueryBigQueryRole. - Role launch stage:

General Availability.

- Title: You can name it whatever you want, for the purposes of the documentation, it is named

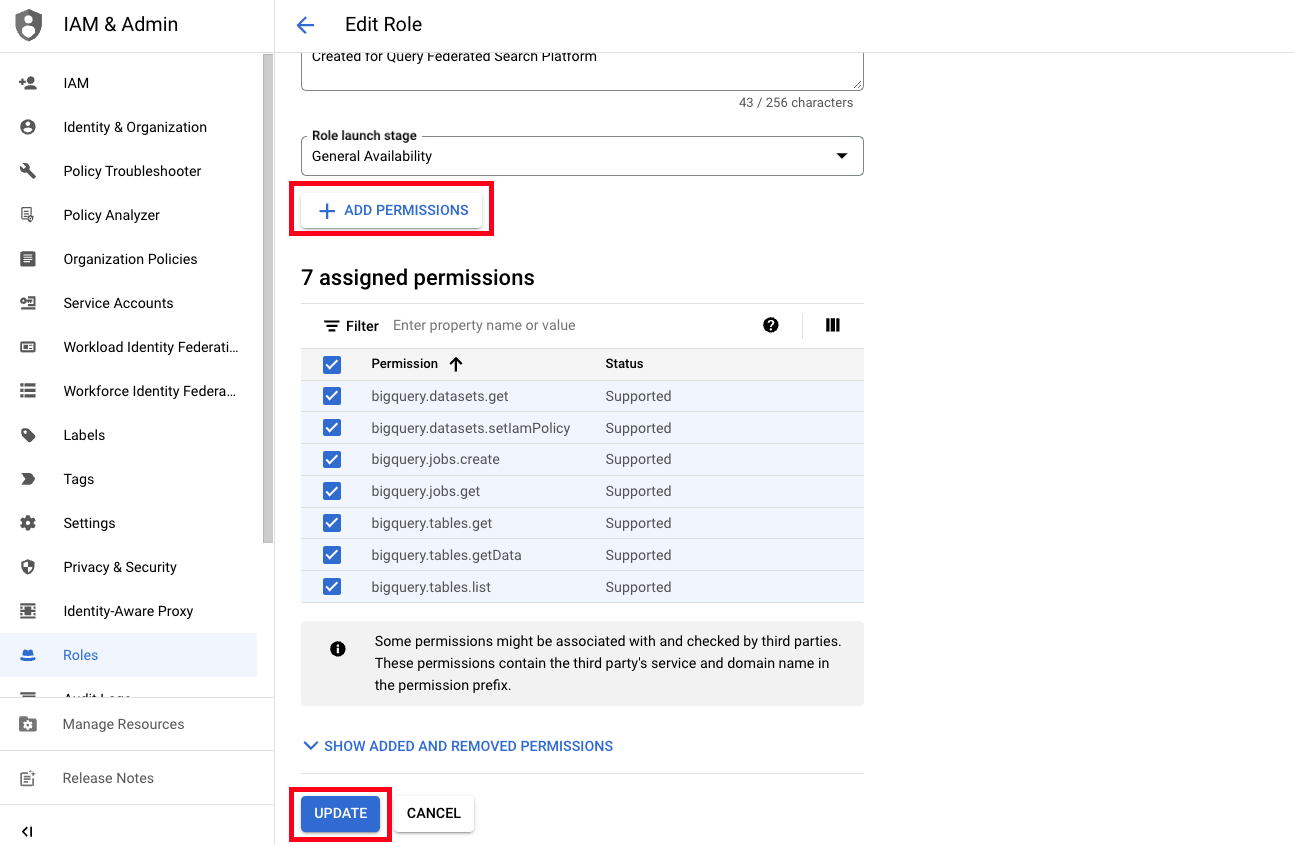

- Select Add Permissions and add the below permissions to your GCP IAM Role and then select Create (or Update if it is an existing Role) as shown below (FIG. 2).

bigquery.datasets.getbigquery.datasets.setIamPolicybigquery.jobs.createbigquery.jobs.getbigquery.tables.getbigquery.tables.getDatabigquery.tables.list

FIG 2. - Assigning permissions to a GCP IAM Role and Creating it

In the next section you will create a GCP IAM Service Account, assign the Role you created, and generate Access Keys.

Create GCP IAM Service Account, Assign Role, and generate Access Keys

In this section you will create a new GCP IAM Service Account and assign the Role you created in the previous section to it and then generate Access Keys for the Service Account.

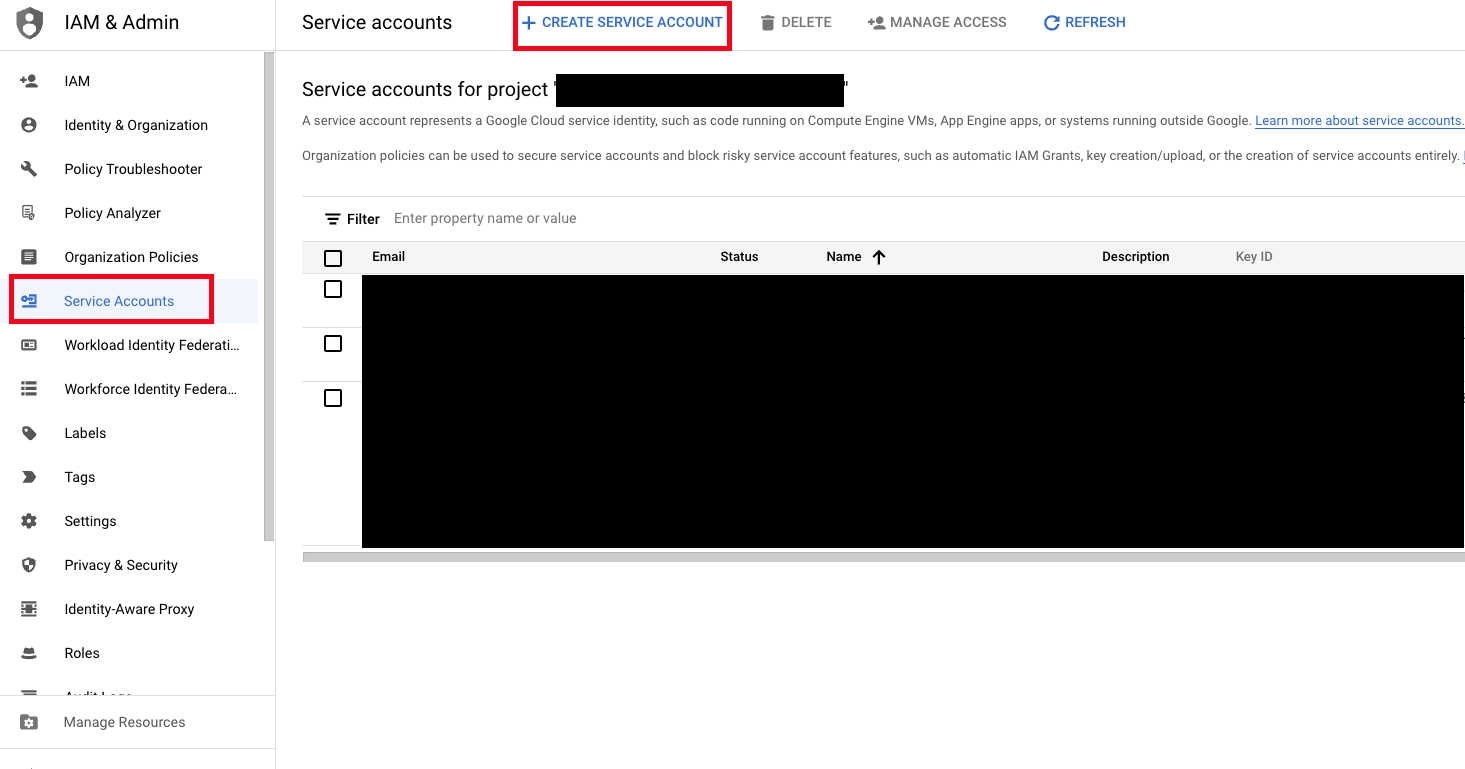

- While staying in the IAM & Admin Console, navigate to the Service Accounts section and select Create Service Account as shown below (FIG. 3).

FIG. 3 - Preparing to create a Service Account in the GCP Service Accounts console

-

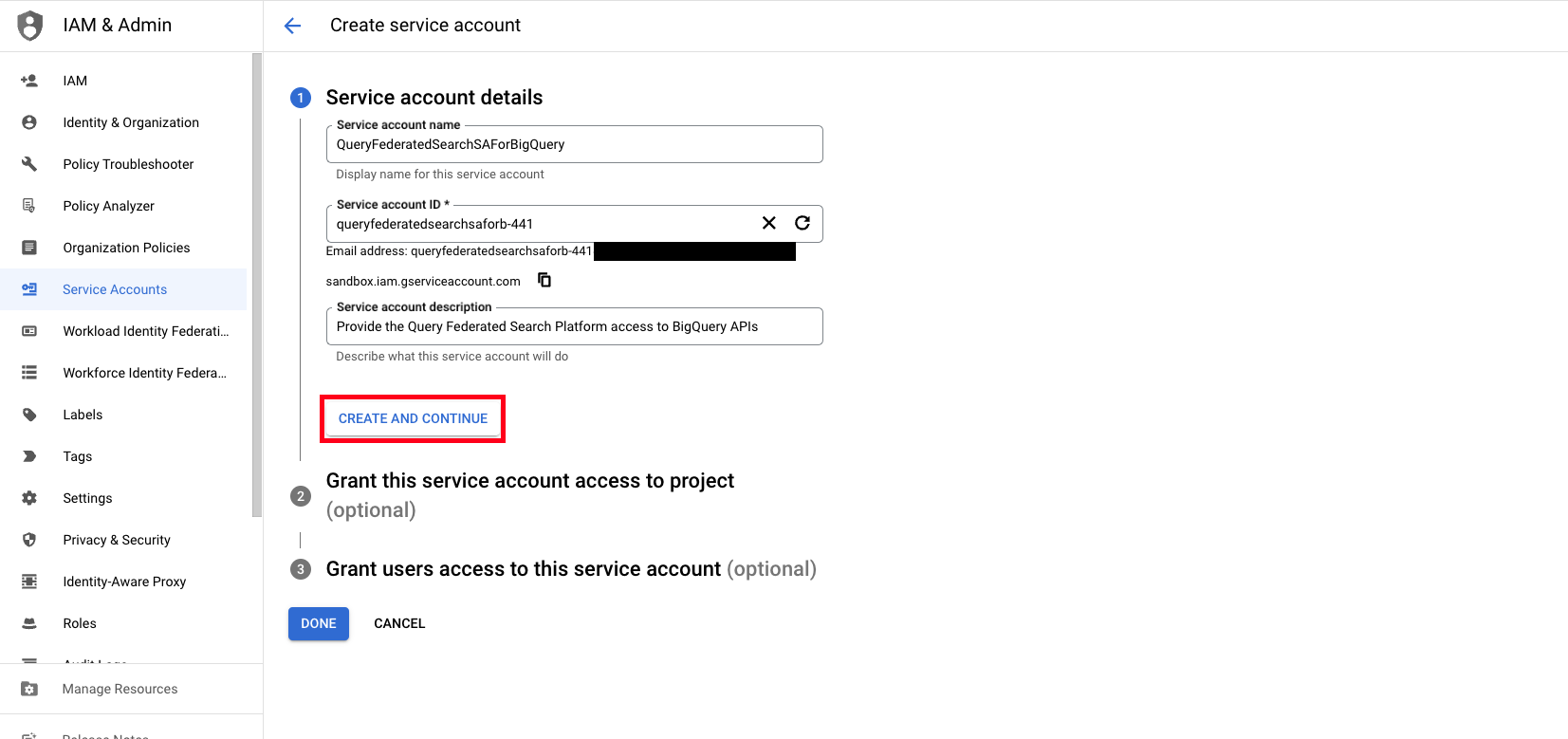

Populate the following values for your new Service Account and then select Create and Continue as shown below (FIG. 4).

- Service account name: Provide a display name for the Service Account, for the purposes of the documentation, it is named

QueryFederatedSearchSAForBigQuery. - Service account ID: This value will be automatically generated based on the value of Service account name, you can optionally override this value.

- Description: Provide a description.

- Service account name: Provide a display name for the Service Account, for the purposes of the documentation, it is named

FIG. 4 - Create a GCP Service Account

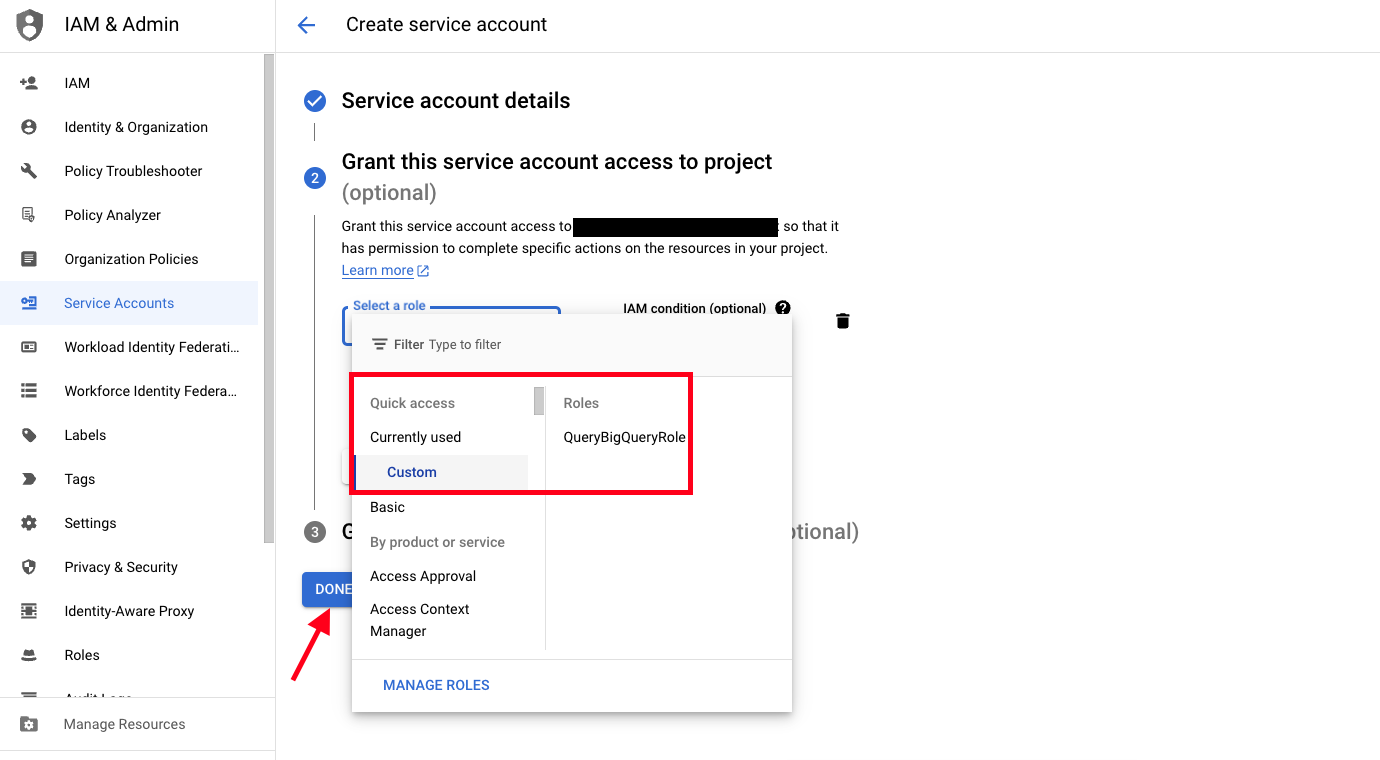

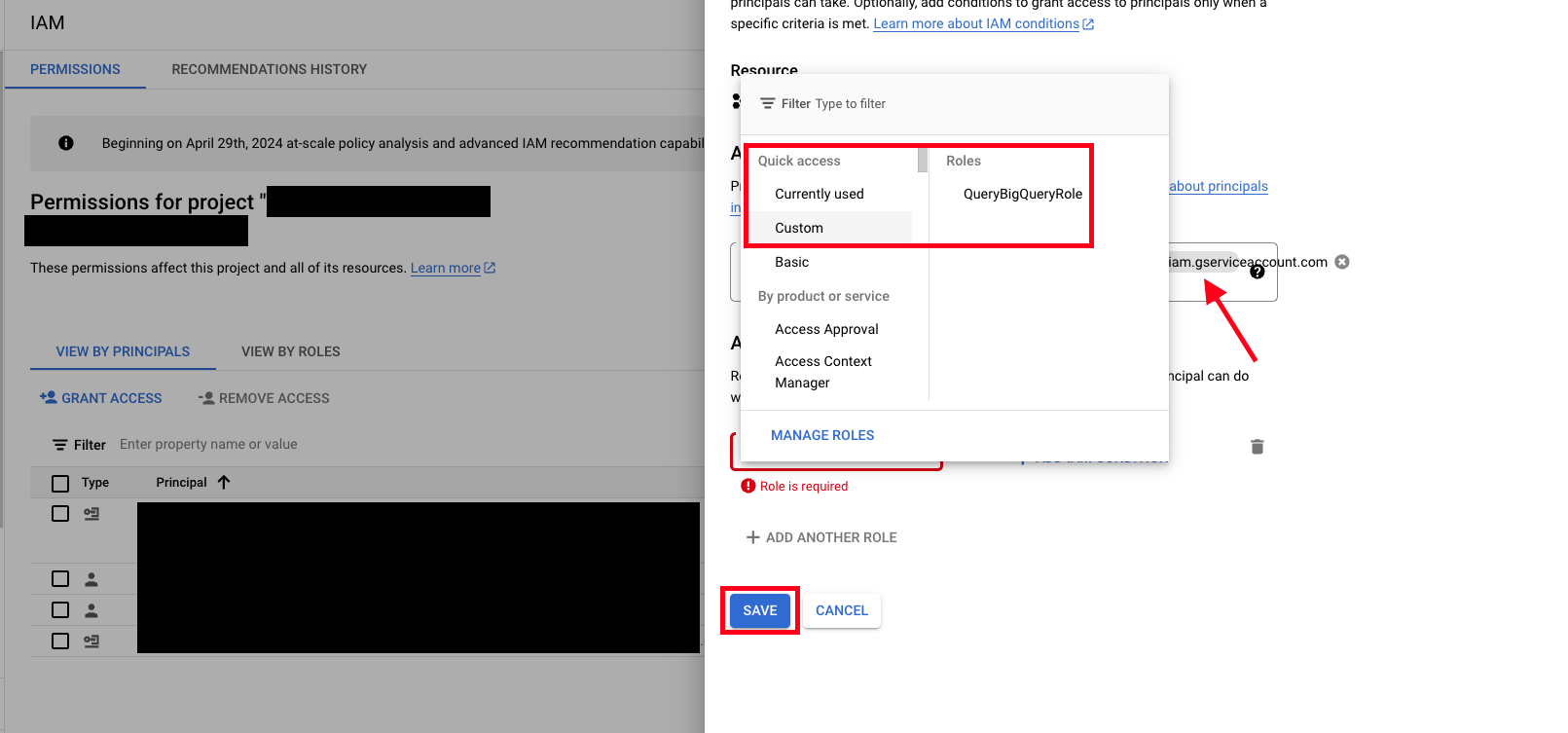

- Select the Select a role dropdown, select Custom, and then select the IAM Role you created in the previous section - in this case it was named

QueryBigQueryRoleand then select Done as shown below (FIG. 5).

FIG. 5 - Assign the custom IAM Role to the GCP Service Account and finalize creation

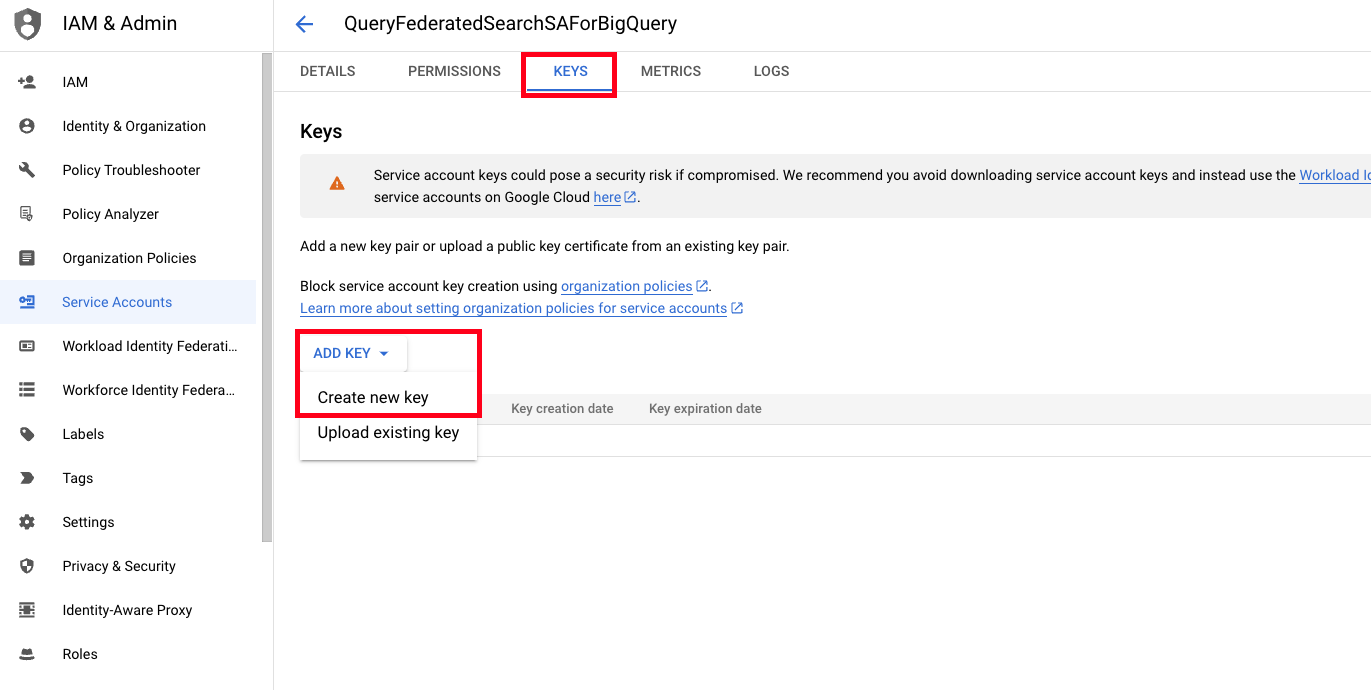

- Select the Service Account you created, select the KEYS tab, select ADD KEY, and then select Create new key as shown below (FIG. 6).

FIG. 6 - Generating a new Service Account

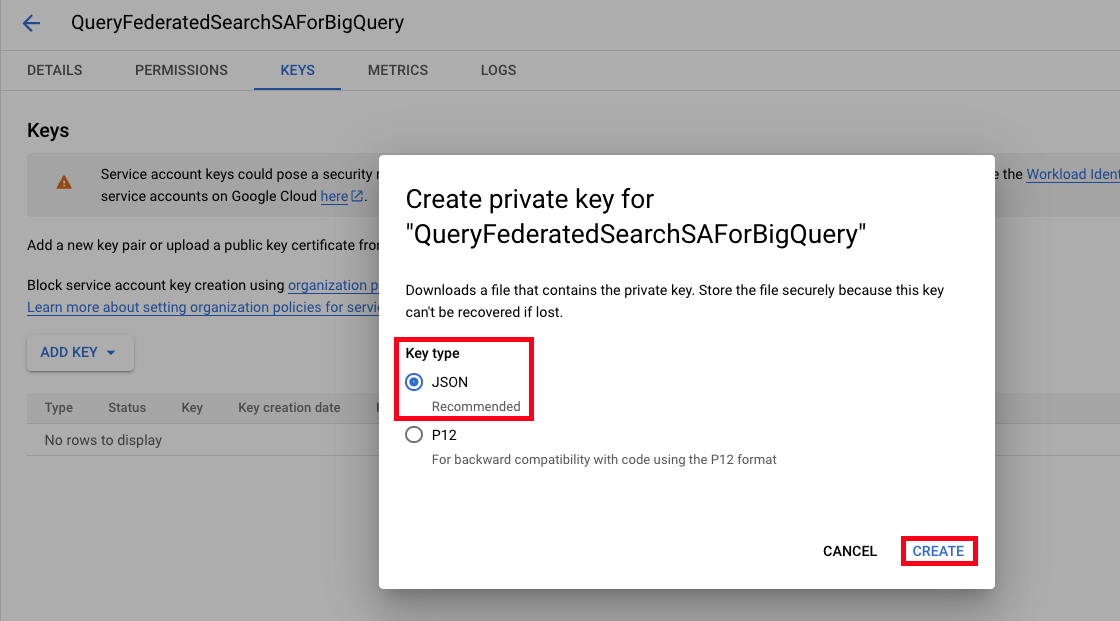

- In the Create private key for (service_account) screen, select JSON under

Key type, and then choose Create as shown below (FIG. 7).

FIG. 7 - Creating a JSON private key for a GCP Service Account

The JSON file containing your Service Account's credentials will automatically downloaded. Ensure you secure this file on your file system and keep the location handy as you will require it to create a Platform Connector for BigQuery.

If you have multiple BigQuery deployments in different GCP Projects and wish to use the same Service Account for all Projects, proceed to the next optional section, otherwise proceed to the Configure a Google BigQuery Connector section.

(OPTIONAL) Grant Service Account access to other GCP Projects

If you wish to grant the Service Account access to multiple GCP Projects for the purpose of minimizing the amount of Service Accounts and Access Keys, repeat the steps within this section for each Project you want to extend the access of your Service Account into.

You will need to copy the Service Account email to grant the access, additionally, you will need to repeat the steps in the Create GCP IAM Role for BigQuery section to provide the custom IAM Role to each Project.

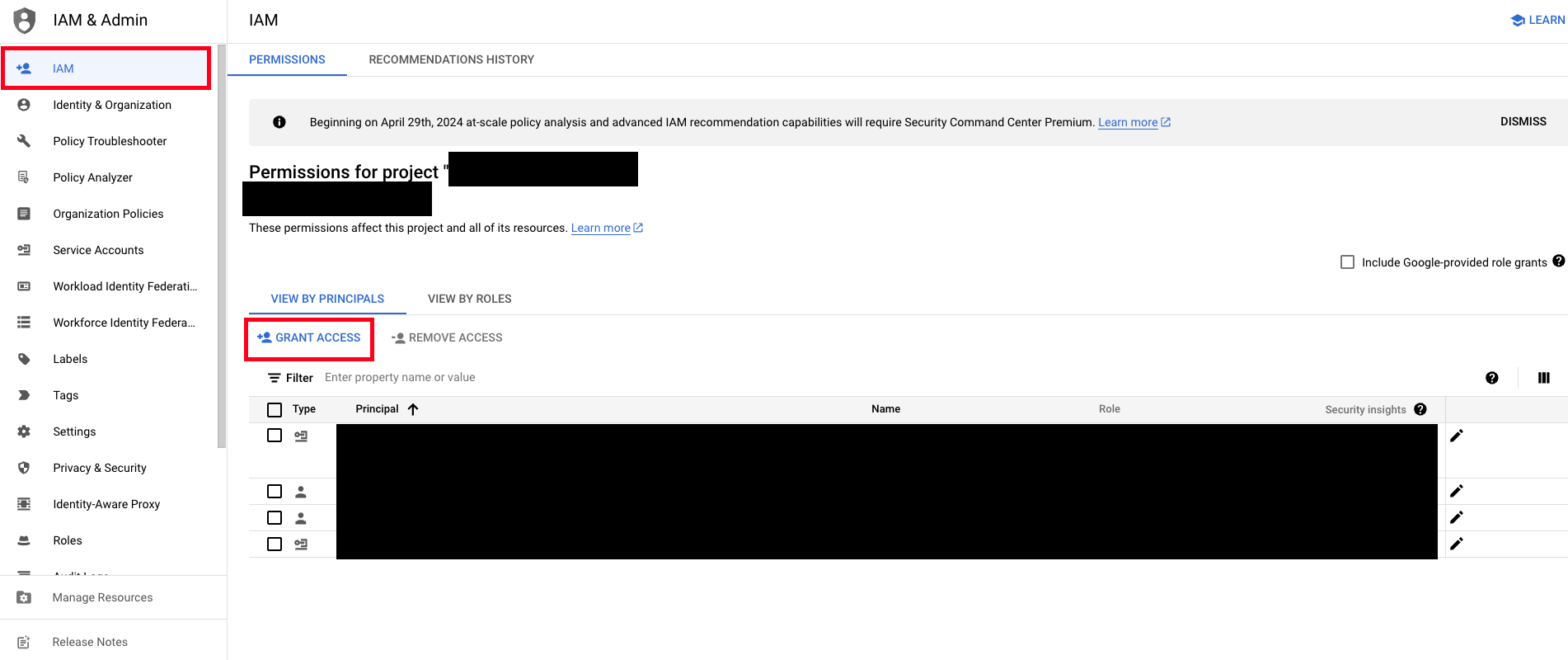

- In the IAM & Admin Console from another Project you have

Owneraccess in, select IAM and then select Grant Access as shown below (FIG. 8).

FIG. 8 - Granting a GCP Project access to a new principal

- In the New principals section, add your Service Account Email.

- Select the Select a role dropdown, select Custom, and then select the IAM Role you created in the Create GCP IAM Role for BigQuery section - in this case it was named

QueryBigQueryRoleand then select Save as shown below (FIG. 9).

FIG. 9 - Granting a Role and permission to a Service Account into a new GCP Project

Repeat these steps for however many GCP Accounts you wish to provide access into. Consider provisioning this access with a script or Infrastructure-as-Code to centrally manage, increase, and/or rollback this access as required.

Configure a Google BigQuery Connector

The Google BigQuery Connector is a dynamic schema platform configuration. Static schemas are platforms in which the Query team pre-configures the type of data normalization that happens and a dynamic schema platform gives the user control for mapping and normalizing data into the Query Data Model. For dynamic schemas, Query provides a no-code data mapping workflow to allow you to map your source data into the Query Data Model. For more information, see the Configure Schema and the Normalization and the Query Data Model sections, respectively.

To connect to your BigQuery tables, Query requires your Project ID, the Dataset name that contains your Tables, the Table name, and the contents of your Service Account JSON access key. Query stores these credentials in AWS Secrets Manager encrypted with an AWS Key Management Service (KMS) Customer Managed Key dedicated to your Query tenant. Every action within Query's backend requires with a policy-as-code agent - Open Policy Agent - and the only principal able to access the Secret and its KMS CMK is a dedicated AWS IAM Role that powers the infrastructure used for the Google BigQuery Connector dedicated to your tenant.

Configure Schema LimitationsDue to limitations in the introspection and mapping process, you may only map one BigQuery table per Connector. There are not any limits to the amount of Connectors you can create.

-

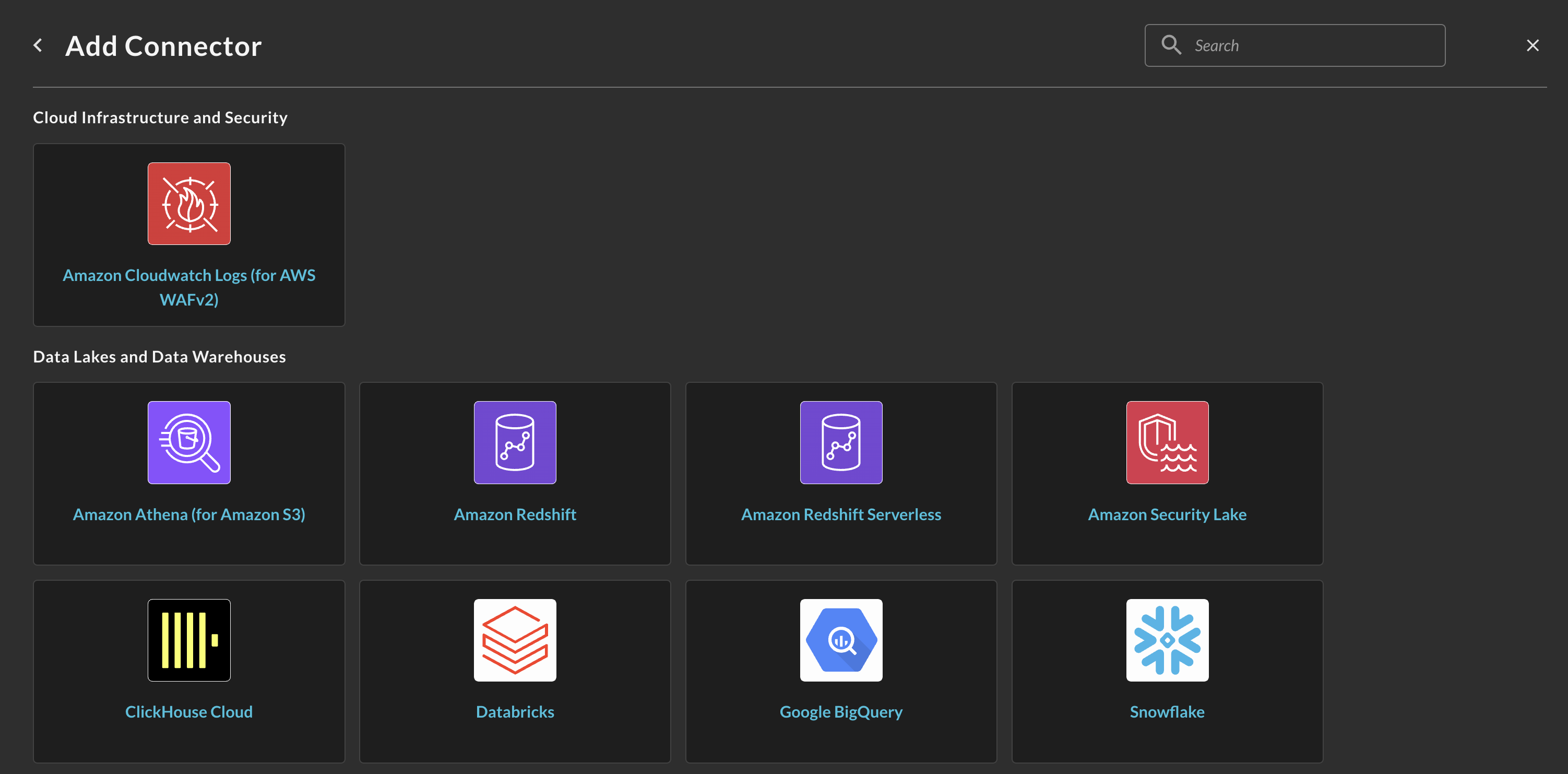

Navigate to the Connections page, select Add Connections, and select Google BigQuery from the

Data Lakes and Data Warehousescategory, optionally type BigQuery in the search bar as shown below (FIG. 10).

FIG. 10 - Locating the BigQuery Connector

-

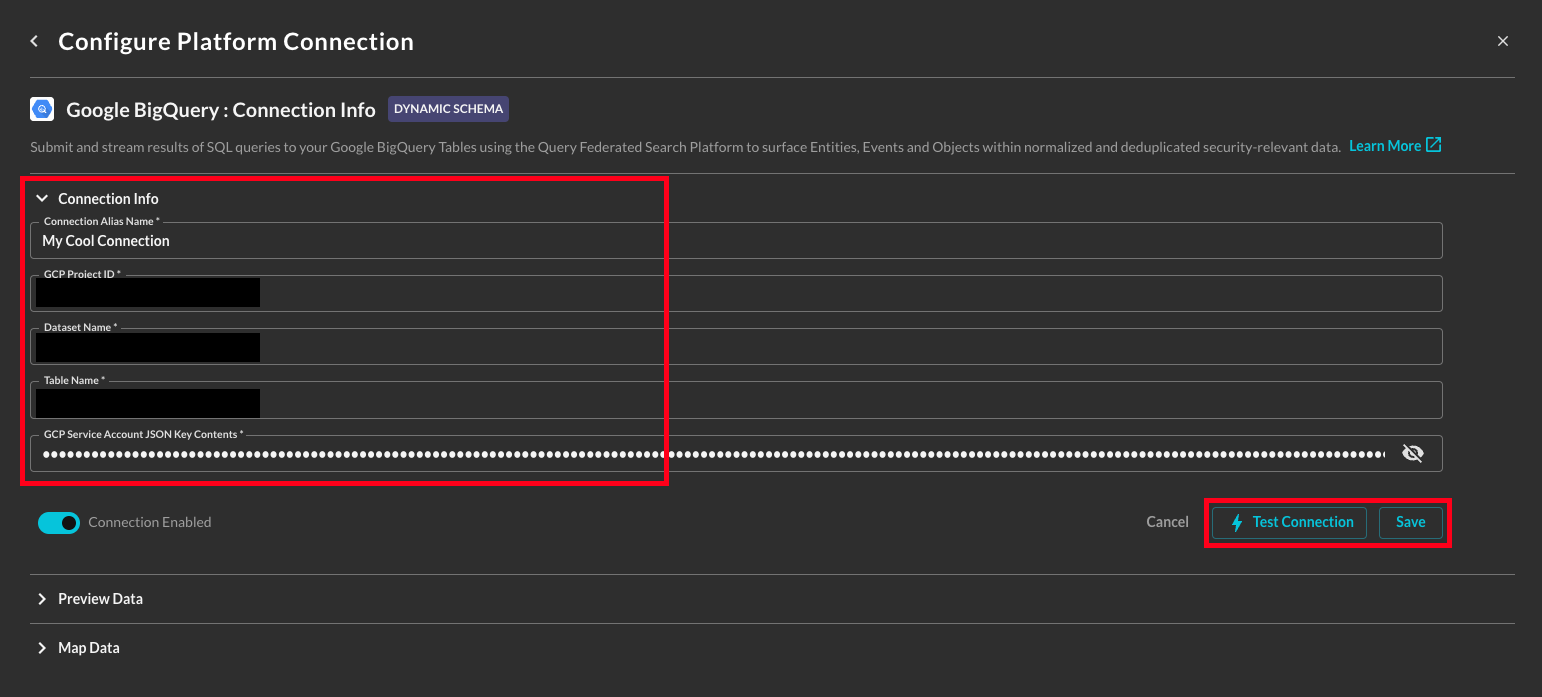

In the Connection Info section of the platform connector, provide the following details, select Save, and then Test Connection as shown below (FIG. 11).

- Connection Alias Name: A contextual name for the Connector, this is used to disambiguate multiple of the same connector.

- Platform Login Method: Leave the default value

Default Login. - GCP Project ID: The GCP Project ID, this is retrieved by selecting the Project selector in the GCP Console and copying the value in ID column.

- Dataset Name: The Dataset that contains the Table you are onboarding. Datasets are logical containers of several Tables and other BigQuery objects and are analogous to Databases in AWS Glue or Schemas in Snowflake.

- Table Name: The Table you are onboarding into the Query Federated Search Platform. Ensure this Table is in the Dataset you provided to avoid errors. You can also provide the name of a View here.

- GCP Service Account JSON Key Contents: Select all and paste the contents of your GCP IAM Service Account JSON access key into this section. Do not include any whitespace.

FIG. 11 - Providing Connection Info for Google BigQuery to the Query Platform Connector

- Execute the Configure Schema workflow to map your target table data into the QDM.

After completing the Configure Schema workflow, you are ready to perform Federated Searches against your BigQuery tables (or views).

Querying the Google BigQuery Connector

Querying your data completely depends on how your configured your schema mapping, as not all data sources are created the same, you can theoretically query across any number and combination of the currently supported Entities within the Query Federated Search platform.

- Hostnames (and Domains)

- IP Addresses (IPv4 and IPv6)

- MAC Addresses

- User Names

- Email Addresses

- URL Strings (and URIs)

- File Names

- Hashes (e.g., MD5, SHA1, SHA256, SSDEEP, VHASH, etc.)

- Process Names

- Resource IDs

- Ports

- Subnets (e.g.,

192.168.1.0/24or2001:0db8:85a3:0000::/64) - Command Lines (e.g.,

python3 encrypted.pyorssh user@ubuntu-ip-10-0-0-1) - Country Code (e.g.,

USorCN) - Process ID

- User Agent

- Common Weaknesses & Enumerations (CWE) IDs (e.g.,

CVE-2024-100251) - Common Vulnerabilities & Enumerations (CVE) IDs (e.g.,

CWE-79) - User Credential UID (e.g., AWS User Access Key ID -

AKIA0007EXAMPLE0002) - User ID

- Group Name

- Group ID

- Account Name

- Account ID

- Script Content

Resources

Refer to the previous sections' hyperlinks for more information on specific resources, services, tools and concepts. For further help with creating tables and performance tuning, consider some of the resources below.

- BigQuery Information

- Create and use tables

- Introduction to partitioned tables

- Introduction to clustered tables

- Introduction to views

- Introduction to loading data

Troubleshooting

- If you recently changed your permissions / Role in Query, log out and in again and clear your cache if you cannot Save or Test Connection.

- Verify you added the correct Permissions to the GCP IAM Role

- Verify you attached the Role to the Service Account

- Verify you provided the correct Service Account JSON access key to the BigQuery Platform Connector

- Verify you are using the correct GCP Project ID, or that you granted access to your Service Account to the Project

- Verify the name of your Dataset

- Verify that the Table or View is in the provided Dataset

- Verify that your Table or View has data in it

If you have exhausted the above Troubleshooting list, please contact your designated Query Sales Engineer or Customer Success Manager. If you are using a free tenant please contact Query Customer Success via the Support email in the Help section or via Intercom within your tenant.

Updated 3 months ago